For the first few weeks of our GSP 420 course, my group and I have been in the "production" phase, where we're beginning to work on our assigned cores (Main Framework Core, Graphics Core, and AI & Collision Core) based on the class diagrams we developed for them. To begin the core that I'm in, I started with the spriteContainer class and the Tile class. We want our game engine to be as universal as possible, so my idea for the spriteContainer class was to have one place to store Texture2D objects, so that whenever we want one of those images we can just read it from the class. As a starting template I created a few Texture2D properties, each with a getter and a setter: player, enemy, block1, and exitTile.

public static Texture2D player { get; private set; }

public static Texture2D enemy { get; private set; }

public static Texture2D block1 { get; private set; }

public static Texture2D exitTile { get; private set; }

The reasoning for the getter and setter is simple, and the names say it all: the getter reads the value and the setter assigns it. I purposely made the setter private so that only the spriteContainer class can assign these properties; no other class can. On their own, though, the properties hold nothing, so reading them from outside the class at this point just gives you null. To fix that I created a function called Load(ContentManager content), whose purpose is to assign images to these Texture2D properties using XNA's content.Load. In this function I began assigning the properties like so:

player = content.Load<Texture2D>("spriteContainer/player");

enemy = content.Load<Texture2D>("spriteContainer/enemy");

block1 = content.Load<Texture2D>("spriteContainer/block1");

exitTile = content.Load<Texture2D>("spriteContainer/exitTile");

What's happening is that we're assigning Texture2D properties like player, enemy, and exitTile by loading image files (.png in our case) from the "spriteContainer" folder and specifying the asset name without the file extension. The Texture2D in angle brackets isn't a cast; it's a generic type argument that tells content.Load what type of asset to return. So now when we're outside of this class we can just write spriteContainer.player instead of writing content.Load<Texture2D>("spriteContainer/player") every time. The best part, considering this is a game engine, is that for any game we can keep adding new properties and loading them in the Load function so they're available everywhere. That finishes the spriteContainer class... until we make a game!
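To show the "load once, read everywhere" idea on its own, here's a minimal sketch in plain C++ rather than C#/XNA, so it runs without the framework. Everything in it is an assumption for illustration: the Texture struct is a stand-in for Texture2D, and load() only records the asset path instead of reading a real image.

```cpp
#include <map>
#include <stdexcept>
#include <string>

// Hypothetical stand-in for Texture2D: it only remembers the asset path.
struct Texture {
    std::string path;
};

class SpriteContainer {
public:
    // Plays the role of Load(ContentManager): fill the store once at startup.
    static void load() {
        const char* names[] = {"player", "enemy", "block1", "exitTile"};
        for (const char* name : names) {
            textures()[name] = Texture{std::string("spriteContainer/") + name};
        }
    }

    // Read-only access from anywhere; throws if load() has not run yet.
    static const Texture& get(const std::string& name) {
        auto it = textures().find(name);
        if (it == textures().end()) {
            throw std::out_of_range("texture not loaded: " + name);
        }
        return it->second;
    }

private:
    // Function-local static so the store exists before first use.
    static std::map<std::string, Texture>& textures() {
        static std::map<std::string, Texture> store;
        return store;
    }
};
```

Calling SpriteContainer::get("player") before load() throws, which is the C++ cousin of the null you'd get from spriteContainer.player before Load runs. Keeping the store private plays the same role as the private setters: only the container decides what gets assigned.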

After completing the spriteContainer class I decided to tackle the Tile class. The purpose of the Tile class is simple: it identifies something in a level's .txt file as a tile. A tile can be a texture from spriteContainer or just a simple string name with a collision type. Thanks to the class diagram for the graphics core, I know there's going to be an association between Tile and the levelEditor class, so Tile is going to matter quite a bit. The way I thought of the Tile class was to identify something as a tile and decide what collision type it has. To begin, I created an enumeration called collisionType.

enum collisionType{ Passable = 0, Blocked = 1, Platform = 2, Trigger = 3}

The enumeration is pretty straightforward: it determines what collision type a tile has. If a tile in the .txt file has a collision type of Passable, the player can pass through it. If the tile is Blocked, the player cannot pass through it. If it is a Platform, the player can jump onto it, keeping in mind that the tile still has bounds. If the tile is a Trigger, it can cause an event, such as an item event. After this I created a Texture2D field called texture and a collisionType field called collision. Then, to be able to control the size of a tile, I added:

public const int Width = 32;

public const int Height = 32;

public static readonly Vector2 Size = new Vector2(Width, Height);

I did this because I know the Draw function in the levelEditor class will use Size. If I want to change the size of a tile, I only have to change the value of Width or Height. Next I created a simple constructor:

Tile(Texture2D texture, collisionType collision)

Inside it I just assign the parameters to the fields:

this.texture = texture;

this.collision = collision;
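Putting the pieces together, here's a rough C++ sketch of the same Tile idea (not the actual C#/XNA class). The textureName string stands in for the Texture2D, and the two helper functions are my own assumptions about how the collision types might be queried; the post only defines what each type means.

```cpp
#include <string>
#include <utility>

enum class CollisionType { Passable = 0, Blocked = 1, Platform = 2, Trigger = 3 };

struct Tile {
    // Same fixed tile size as in the post.
    static constexpr int Width = 32;
    static constexpr int Height = 32;

    std::string textureName;  // hypothetical stand-in for the Texture2D
    CollisionType collision;

    Tile(std::string textureName, CollisionType collision)
        : textureName(std::move(textureName)), collision(collision) {}
};

// Assumption: the player can land on Blocked and Platform tiles.
bool canStandOn(const Tile& tile) {
    return tile.collision == CollisionType::Blocked ||
           tile.collision == CollisionType::Platform;
}

// Assumption: only Blocked tiles stop sideways movement.
bool blocksSideways(const Tile& tile) {
    return tile.collision == CollisionType::Blocked;
}
```

With this, Tile("block1", CollisionType::Platform) is something you can stand on but still walk past, while a Trigger tile like the exit does neither and is left for the event logic to handle.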

That completed the Tile class. Now all that's left are four classes: camera, levelEditor, Animate, and Render! I can't wait to begin the Animate class.

Monday, November 24, 2014

Monday, November 10, 2014

Creating a Game Engine: Class Diagram and Requirements List

So to start this off, I am currently taking GSP 420, which is a course built around creating your own game engine. I have to say, I've been eager to take this course because I've always wanted to make my own game engine. Two weeks into the course, my group and I have decided to create a game engine based around a platformer, built on C# and XNA. The group is divided into three cores: Main Framework Core, Graphics Core, and AI and Collision Core. I am part of the Graphics Core, and I'm pretty comfortable with that because I enjoy working with graphics! To begin our game engine we did some analysis and planning by creating a class diagram and a requirements list specific to our core. Creating a class diagram and a requirements list is a great way to get an idea of how the core is going to be structured within the overall system.

As you can see in the class diagram, we have quite a few things going on, and that's only the graphics core. Since we want things to be as universal as possible, my thought was to create a class which holds all sprites, called spriteContainer. With it, other classes such as levelEditor can put a texture on screen just by reading a specific property such as player or enemy1. The levelEditor's purpose is what the name says: it edits a level's properties and reads .txt files. In this class we can grab textures and set them to be a tile that blocks the player's path, or a tile that marks the exit point of a level. After setting everything up, we can draw all the "tiles" based on the level index read and the file streamed. The cool thing about this class is its ability to read .txt files. Let's say we give a certain sprite the character "P". Placing P in a .txt file tells the level editor, oh hey, this is a platform. As the diagram shows, there is an association between levelEditor and Tile. The purpose of Tile is to take in an image from the spriteContainer class and let you determine whether it is a platform, has a trigger, or is visible or invisible.

Since our game engine is for platformers, and we want the engine to create animations from any sprite sheet, we created an animation class called Animate, which is associated with the spriteContainer class. Animate takes in a sprite sheet, creates a cell that shifts left or right across it, and determines whether the animation loops or not. So that I don't go into too much detail, it all comes down to the Render class. Its whole purpose is to draw: it draws all tiles based on the level in the level editor, and it renders and updates the screen coming from ScreenManager.
That finishes the class diagram for our Graphics Core.
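To make the .txt idea a bit more concrete, here's a small C++ sketch (again standalone, not our actual C# levelEditor) of turning level text into a grid of collision types. Only "P" for platform comes from the description above; the '.', '#' and 'X' characters and the function names are made up for the example.

```cpp
#include <sstream>
#include <stdexcept>
#include <string>
#include <vector>

enum class CollisionType { Passable, Blocked, Platform, Trigger };

// 'P' = platform is from the post; the other characters are assumptions.
CollisionType collisionFromChar(char c) {
    switch (c) {
        case '.': return CollisionType::Passable;
        case '#': return CollisionType::Blocked;
        case 'P': return CollisionType::Platform;
        case 'X': return CollisionType::Trigger;
        default:
            throw std::invalid_argument(std::string("unknown tile character: ") + c);
    }
}

// Each line of text becomes one row of tiles, top to bottom.
std::vector<std::vector<CollisionType>> parseLevel(const std::string& text) {
    std::vector<std::vector<CollisionType>> rows;
    std::istringstream in(text);
    std::string line;
    while (std::getline(in, line)) {
        std::vector<CollisionType> row;
        for (char c : line) {
            if (c == '\r') continue;  // tolerate Windows line endings
            row.push_back(collisionFromChar(c));
        }
        rows.push_back(row);
    }
    return rows;
}
```

A level written as three lines, "..X", "PP." and "###", would come back as three rows of three tiles each, with the trigger in the top-right corner. Throwing on an unknown character is one possible design choice; it surfaces typos in a level file early instead of silently drawing nothing.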

Now it all comes down to the requirements list! I actually enjoy doing the requirements list a lot because it works as a check-off sheet: when you begin testing, you go through the list and see whether the system meets every requirement. When creating a requirements list you want to be as thorough as possible, because if there's a bug in your system that has nothing to do with your requirements, the blame can fall on the programmer. Our requirements list doesn't look very thorough only because it covers just the graphics core. My partner and I kept it pretty organized, labeling each requirement with an ID and giving each description a status so we can use it as a check-off sheet. We tried to be as detail oriented as possible so that when we test the engine we can make sure everything is running the way it should.

[Image: Graphics Core]

[Image: Requirements List]

Overall I'd have to say that when it comes to creating any sort of system, whether it's software, a program, or a game engine, it's wise to do some planning beforehand rather than diving straight into the production phase, because you'll likely run into more errors than if you had planned things out. Great ways to plan include creating class diagrams, use cases, requirements lists, and a risk assessment table.

Tuesday, November 4, 2014

Getting started with FX Composer 2.5

One of the things I enjoy a lot as a programmer is handling things on the graphics end. This can mean many things, such as creating a user interface for games, or handling 2D and 3D graphics and implementing them through a game engine or DirectX. Starting this new course excites me because we begin to approach more of the three-dimensional side of DirectX, such as working with shaders. One of the first things we were introduced to was FX Composer, and I have to say the professor wasn't lying: it seriously looks like 3ds Max and a programming language combined! FX Composer is a tool where graphics developers can create or modify shaders by writing an effect file. The effect file is then used by a Direct3D program, where a programmer can apply that shader to a 3D model. With DirectX Standard Annotations and Semantics (DXSAS), programmers can edit parameter values from a "host application" and so adapt the shader for their own use. In other words, we can use one shader on two different objects and get two different looks by tweaking its parameters.

As you can see in the picture, this is FX Composer, and it really does look like a combination of 3ds Max and C++! The middle window is the editor, where all the programming lives, whereas the top left window is the materials window. The top right window is the properties window, which shows the properties of a material, and the bottom right is the perspective window, where you can see a mesh with the shader/material applied to it. Looking at the Phong effect file, we can see quite a few parameters such as Lamp0Pos, Ambient, Bump, etc. Under these you see annotations such as UIName, UIMin, UIMax, and so on. The parameter values can be changed through FX Composer or even through C++, which is great because it means you can change them dynamically!

So the question you may ask yourself is: how do you get at these values in C++? One way is to determine the parameter's data type, then create a variable of that same type plus a second variable that acts as a handle. For instance, in this project we worked with the Gooch Bump shader. The shader lets you change its warm color and its cool color, and both are color values. So I created two variables of type D3DXCOLOR named warmColor and coolColor, and two of type D3DXHANDLE named hWarmColor and hCoolColor. In the function where we load the shader's effect file, I assigned the two handles by calling GetParameterByName, which takes the name of a shader parameter. Doing so basically says: okay, these handles now refer to those parameters, so you can edit their values as you please.

That led me to the render function, where I set warmColor to D3DXCOLOR(0.7f, 0.03f, 0.01f, 1.0f) and coolColor to D3DXCOLOR(0.01f, 0.05f, 0.25f, 1.0f). After choosing the colors you have to pass the values to the effect, which takes the handle, the address of the variable that stores the color, and its size. For this I did:

m_pEffect->SetValue(hWarmColor, &warmColor, sizeof(D3DXCOLOR));

m_pEffect->SetValue(hCoolColor, &coolColor, sizeof(D3DXCOLOR));

Which gave me the picture below:

For a first assignment I'd have to say this was quite fun, and I look forward to what else FX Composer brings to the table!

[Image: FX Composer]

[Image: Effect Skeleton]

[Image: Renderer]

[Image: Result]

Sunday, June 22, 2014

Implementing an Item Pickup through Unreal Engine with Kismet

When some people hear about programming they get scared away, or get lost as soon as the talk turns to logic. Others are intrigued enough to have a conversation about logic itself. As time goes on, programming is becoming easier to do and understand, considering how many alternatives there are to writing code directly in a programming language. For example, one of the things I was taught as a programmer was how to use flowcharts. Flowcharts are like pseudocode in that you write out your logic without the syntax; the only difference is that flowcharts are diagrams, so they help you visually. Nowadays tools like Visual Studio offer diagrams for C++, such as UML-style diagrams, which help you understand logic in a similar way, and even game engines like Unreal have an alternative like this. With Unreal Engine 3, a programmer can create logic in two ways: scripting with UnrealScript, or using Kismet, which lets you lay logic out in a flowchart-like manner. Having used Unreal Engine 3 and Kismet for quite some time, I've learned that you can approach logic in many different ways. For example, while working on my midterm course project I had to create logic for my group's game, Ruin, so that the player can pick up journals. So, something very simple.

When you think about it, how would we create the logic for picking up something off the floor in a game? Taking things step by step helps. What we want is for the player to walk up to the journal, hit "U", and have it picked up and placed in their inventory. What we DON'T want is for the player to stand anywhere in the level, press U, and pick up the journal. That would be pretty bad. So we know there should be an area where the player can pick up the journal, and everywhere else they can't. Fortunately, Unreal Engine has a thing called triggers. In a way, a trigger can be seen as a boolean event: if the trigger is touched, do this; else do that. The great thing about triggers is that you can set up an area where, when the player walks in, he's "touching" the trigger, and when the player walks out, he's "untouching" it, so we can use this to our advantage.

With that in mind, we can have a chain of events take place when the player enters or leaves the trigger. Since I don't want the player to be able to pick up the journal from the get-go, only inside the trigger's area, I created a bool variable named "bCanPickUp" and set it to false on level load. The point of this variable is to determine when the player can and cannot pick up the journal. This level only had two journals, so I created two more bool variables called "bIsBook1" and "bIsBook2", also set to false on level load. You may be asking yourself why I made three bools. Picking up one journal can easily be done with one bool variable; however, when more than one object can be picked up with the same key, we need more than one check to prevent the player from breaking the game.

The purpose of the two new bool variables, bIsBook1 and bIsBook2, is to tell whether the player is picking up the first journal or the second when they hit U. Next I set up my triggers in the level, one right next to each journal. With both triggers selected in the level, I went into Kismet, right-clicked, and chose New Event Using Trigger > Touch. That placed two trigger events into Kismet. From planning the logic beforehand, I knew that once the player touched one of the triggers, that player should be allowed to press U and pick up the journal. So when the first trigger is touched, I set bCanPickUp to true and bIsBook1 to true. When the player touches trigger one, they are now allowed to press U and pick something up, and what they're picking up is Book1. I did the same thing for trigger two with bIsBook2.

That takes us to the journal checks. The logic so far is that on level load bCanPickUp, bIsBook1, and bIsBook2 are set to false, and on trigger touch they may become true. Despite all of this, nothing is happening yet. All I did was initialize the variables and reassign them. To actually let the player pick the journals up we need another event, a key press event. To get it I went to New Event > Input > Key/Button Pressed. With this event we can make it so that when the player presses U he picks up the journal. In the Key/Button Pressed node I added an input name by hitting the green plus sign and entering U as the key that fires the event. I also set the trigger count to zero so the player can press the key as many times as they want. After creating the new event I began the checks. Since there's more than one journal we need two kinds of check: one that determines whether the player is inside the area where the journal can be picked up, and another that determines which item they are picking up. For this I made three Compare Bool nodes, found under New Condition > Comparison > Compare Bool. I connected the input of the first one to the output link of the Key/Button Pressed event, so when the player presses U we compare a bool. This bool is bCanPickUp. If bCanPickUp is true we then compare two more bools, bIsBook1 and bIsBook2, so I connected the inputs of the last two Compare Bool nodes to the True output of the first. At the bottom of each Compare Bool node I attached the bool variable it should test.

For journal one, when the player enters the trigger's area we set bCanPickUp to true, allowing them to press U and pick something up. That comparison takes us to another check, which determines which journal they are picking up. For journal one, if bIsBook1 is true, we destroy both that journal and its trigger to make it seem as if the player picked it up. That feels good, because the journal pickup is almost done... if you don't take into consideration the bugs the player can stumble upon. When you think about it, the player can just keep pressing U over and over, destroying the mesh and trigger even when they're already destroyed. Now imagine the journal pickup had a sound. The sound would play each time the player hit U. To prevent this, on the True output of the bIsBook1 compare I set both bIsBook1 and bCanPickUp to false. Now when the player presses U again, nothing happens at all. I did the same for the second journal; the only difference is in the second comparison, where if bIsBook2 is true we set bIsBook2 to false, set bCanPickUp to false, and destroy that mesh and trigger. After this the pickup worked, but it didn't look very attractive. So I hooked a Flash movie I had created into Kismet: when the player enters the trigger, we play the movie in the top right corner, and when they leave the trigger, we stop playing it. The Flash movie I made was of an open book popping in and out. Placing this into the logic was very easy.
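Stepping back to the bool checks for a moment: since Kismet is visual, here's a small C++ sketch of the same gating logic written out as code. It's only a model of the node graph, not UnrealScript and not an Unreal API. The struct, the function names, and the pickups counter are all made up for the example; the three flag names are the ones from the Kismet setup above.

```cpp
// Plain C++ model of the Kismet graph; nothing here is an Unreal API.
struct JournalPickup {
    // All three flags start false, as on level load.
    bool bCanPickUp = false;
    bool bIsBook1 = false;
    bool bIsBook2 = false;

    bool book1Destroyed = false;
    bool book2Destroyed = false;
    int pickups = 0;  // hypothetical counter, e.g. how often a sound would play

    // Trigger Touch event for journal 1 or 2.
    void onTouchTrigger(int journal) {
        if (journal == 1 && !book1Destroyed) {
            bCanPickUp = true;
            bIsBook1 = true;
        } else if (journal == 2 && !book2Destroyed) {
            bCanPickUp = true;
            bIsBook2 = true;
        }
        // A destroyed trigger can no longer fire, so it is ignored.
    }

    // Key press event for U.
    void onPressU() {
        if (!bCanPickUp) return;  // first Compare Bool

        if (bIsBook1) {           // second Compare Bool
            book1Destroyed = true;  // destroy mesh and trigger
            bIsBook1 = false;       // reset so repeated presses do nothing
            bCanPickUp = false;
            ++pickups;
        }
        if (bIsBook2) {           // third Compare Bool
            book2Destroyed = true;
            bIsBook2 = false;
            bCanPickUp = false;
            ++pickups;
        }
    }
};
```

Pressing U before touching anything does nothing, pressing it after touching trigger one picks up journal one exactly once, and mashing U afterwards changes nothing because both flags were reset. That reset is the part that fixes the repeated-pickup bug described above.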

To place a Flash movie like the journal pop-up, all I did was go to New Action > GFx UI > Open GFx Movie. On touching the trigger we open the movie, and on leaving the trigger we close it. So I connected the trigger's Touched output to the In input of the Open GFx Movie node. In the Open GFx Movie node's properties I assigned my Flash SWF by selecting it in the content browser and hitting the green arrow. After that I created a player variable and an object variable by going to New Variable > Player > Player and New Variable > Object > Object, and connected them to the Player Owner and Movie Player links. For closing the movie I went to New Action > GFx UI > Close GFx Movie. I connected this node's input to the UnTouched output of the trigger and connected its Movie Player link to the same movie player variable that Open GFx Movie uses. As a result, when the player enters the trigger the SWF plays, and when they leave the trigger the SWF closes.

Believe it or not, even after doing this I didn't consider the pickup done. I went on to add a sound and a particle effect to make things more interesting. Adding sound to the pickup logic was pretty easy. On the second-level Compare Bool nodes for both triggers, bIsBook1 and bIsBook2, I added a sound node by going to New Action > Sound > Play Sound and assigned my sound from the content browser by hitting the green arrow. After creating the sound nodes I connected the True output of each Compare Bool to the Play input of its sound node. Just to be safe, I also connected the Finished output of the sound node to its Stop input, to make sure that when the player presses U again they won't hear the sound. Setting the bools to false after picking up the journal already takes care of that, though.

After this I decided to add a particle effect at the location where the player picks up either journal. When we destroy both the trigger and the static mesh, we spawn an emitter actor for our particle system. For my particle system I created some clouds that spread apart across the floor. To get the particles to spawn at the right location I created an actor factory, found under New Action > Actor > Actor Factory. In the properties of the Actor Factory node I opened the list of factory types by hitting the blue downward arrow and selected the emitter actor factory. Still in the Actor Factory properties, I expanded the Factory entry below the blue arrow, which gives us a slot for a particle system. With my particle system selected in the content browser, I hit the green arrow to place it in the actor factory. I then connected the node's Spawn Actor input to the True outputs of both the bIsBook1 and bIsBook2 Compare Bool nodes. So now, on picking up the journal, we spawn the cloud particles, but where? To place the system at the location of the book I connected the Spawn Point link to the object variable of the static mesh, so the particles play at the location of the mesh when it is destroyed.

Well when you think about it, how would we create logic for being able to pick up something on the floor in game. Well taking things step by step helps. What we want the player to be able to do is go up to the Journal, hit "U" and be able to pick it up and be placed in their inventory. What we DON'T want the player to be able to do is go any where in the level, press U and the journals picked up. Now that would be pretty bad. So considering that we know there should be an area where the player can go to in order to be able to pick up the journal and an area where they can't pick it up. Fortunately, thanks to Unreal Engine, they have a thing called Triggers. In a way, triggers can be seen as a boolean event. So if the trigger is touched, do this; else do this. The great thing about tiggers is that you can basically set up a parameter where when the player goes in, he's "touching" the trigger, and when the players outside the trigger he's "untouching" the trigger, so we can use this to our advantage.

Seeing this now what we can do is have a cause of events taking place when the player enters or leaves the trigger. So considering how I don't want the player to be able to pick up the journal from the gecko, only in the triggers parameter, I actually created a bool variable by the name of "bCanPickUp" and set it to false upon level loaded. The point of this bool variable is to determine when the player can and cannot pickup the journal. Now for this one level we actually only had two journals so I made sure to create two more bool variables called "bIsBook1" and "bIsBook2" setting it to false upon level loaded. So you may be asking yourself how come I made three bools. Well picking up one journal can be easily done with one bool variable however, when we talk about more than one object being picked up with the same exact key we have to do more than one check to prevent the player from breaking the game.

The two new bool variables I created bIsBook1 and bIsBook2 purpose are to check whether or not when the player hits U, are they picking up the first journal or second journal. After doing this I made sure to set up my triggers in the level right next to the journal. Upon clicking both triggers in the level I went into kismet hitting the right mouse button and going to New Event Using Trigger > Touch. Doing so placed two trigger events onto kismet. So after pre planning the logic I knew when the player touched one of the journals, than that player would be allowed to press U and pick up the journal. So upon the first trigger being touch I set bCanPickUp to true and bIsBook1 to true. So when the player touches trigger one, they are now allowed to press U and pickup something and what their picking up is Book1. Now the same thing I did for trigger one I did for trigger two.

After doing this takes us to the journal checks now. The logic so far for the journal pickup is upon level loaded bCanPickUp, bIsBook1 and bIsBook2 are set to false and upon trigger touch either or may become true. Well although of this, nothing is happening. All I did was do a variable initialization and re assign the variables. To actually allow the player to pick the journals up we have to do another event which is a keypress event. To get to the key/ button press I went to New Event > Input > Key/ button press. With this event we can actually make it to when the player presses U he will pick up the journal. In the Key/ Button press node I added an input name by hitting the green plus sign and putting in U as the key to trigger the event. I also made sure to set the trigger count to zero to allow the player to be able to press the key as much as they want. After creating the new event I begun doing the checks. Considering there's more than one journal we have to do two checks. A check that determines if they are in the parameter to pick the journal up, and another check to determine what item they are picking up. Considering this, I made three compare bools found by going to New Condition > Comparison > Compare Bool. With one of the compare bools I connected its input to the output UV of the Key/ Button press event. So when the player presses U we compare a bool. This bool will be bCanPickUp. So if bCanPickUp is true we then compare two more bools which is bIsBook1 and bIsBook2. With this I connected the input UV of the two last compare bools to the output of True of the first compare bool. On the bottom of the compare bools I attach the bool variable to the correct location to signify what we are testing.

Doing this for journal one we have it when the player enters the triggered parameter we set bCanPickUp to true allowing them to press E and be able to pickup something. Well that comparison takes us to another check where it checks which journal they are picking up. So for journal one, if bIsBook1 is true, we destroy both that journal and that trigger to make it seem as if the player picked it up. Now after doing that feels good because the journal pick up is literally almost done......if you don't take into consideration that they're bugs the players can stumble upon. Well now when you think about it, the player can just keep pressed U over and over again destroy the mesh and trigger even when it's already destroyed. Now imagine if the journal pick had sound upon picking up. The sound would constantly play each time the player hit U. To prevent this what I did was in the compare bool upon bIsBook1 being true I set the variable bIsBook1 and bCanPickUp to False. So now when the player presses U nothing happens at all. With this first logic for the first journal I did with the second journal, the only difference is, when we go to the second comparison... when bIsBook2 == true we set bIsBook2 == false, bCanPickUp == false and we destroy that mesh and trigger. After doing this we got the pickup working but it doesn't seem really attractive. What I did after this was actually implement a flash movie I created in kismet so when the player enters the trigger,we play the movie that appears on the top right corner and when they leave the trigger, we stop playing it. The flash movie I made was of a open book popping in and out. Placing this into the logic was very easy.

In order to place a flash movie like a journal pop up all I did was go to New Action > GFx UI > Open Gfx Movie. Upon touching the trigger we open the flash and upon leaving the trigger we close it. So what I did was connect the touch UV with the input In UV of the open Gfx Movie node. In the Open Gfx Movie node properties I placed in my flash SWF by adding it in through the content browser by hitting the green arrow. After doing that I created a player variable and a object variable by going to New Variable > Player > player and New Variable > Object > Object. With these variables I connected them to to UV's Player owner and Movie Player. With the movie closing I went to New Action > Gfx UI > Close Gfx Movie. With this node I connected its input to the untouch Output of the trigger and also connected the movie player to the same movie player variable Open Gfx Movie has. Thus allowing when the player enters the trigger an swf plays and when they leave the trigger the swf closes.

Believe it or not, even after doing this I didn't consider the pick up done. I actually went on and added sound to the pickup and a particle effect to make things seem more intriguing to see. Implementing sound with the pickup logic was pretty easy to do. All I did was in the second compare bool for both triggers; bIsBook1 and bIsBook2 I added a sound node by going to New Action > Sound > Play Sound and added my sound through the content browser by hitting the green arrow. After creating the sound nodes I connected the output of the comparison bool True to the input Play of the sound node. Now, just to make things safe I actually connected the finished ouput of the sound node to stop input of the sound just to make sure when the player pressed U again they won't hear a sound. Although I fix that upon setting the bools to false upon picking up the journal.

After this I decided to add a particle effect at the location where the player picks up either journal. When we destroy the trigger and static mesh, we spawn an emitter actor for our particle system. For my particle I created some clouds that spread apart on the floor. To get the particle to spawn at the right location I created an Actor Factory node, found under New Action > Actor > Actor Factory. In the node's properties I opened the list of factory types by hitting the blue downward arrow and selected the emitter actor factory. Still in the properties, I expanded the Factory entry below the blue arrow, which exposes a slot for a particle system. I selected my particle system in the content browser and hit the green arrow to assign it to the actor factory. Then I connected the Spawn Actor input link to the True output link of both compare bools, bIsBook1 and bIsBook2, for both triggers. So now picking up the journal spawns the cloud particles, but where do they appear? To place the system at the book's location I connected the Spawn Point link to the object variable of the static mesh, so the particle plays at the mesh's location when it is destroyed.

That completes the journal pickup for the game Ruin.
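To make the flag handling easier to follow outside of Kismet, here is a minimal Python sketch of the same idea. It is only a model, not the Kismet graph itself: the class and method names are made up for illustration, and it assumes that entering a journal's trigger sets both bCanPickUp and that journal's bIsBook flag, and that leaving the trigger clears them.

```python
from dataclasses import dataclass, field


@dataclass
class Journal:
    is_book: bool = False    # stands in for bIsBook1 / bIsBook2
    destroyed: bool = False  # the journal mesh and its trigger go together


@dataclass
class PickupLogic:
    journals: dict                      # journal name -> Journal
    can_pick_up: bool = False           # stands in for bCanPickUp
    events: list = field(default_factory=list)

    def touch(self, name):
        """Player enters a journal's trigger (assumed to set both flags)."""
        journal = self.journals[name]
        if journal.destroyed:
            return  # a destroyed trigger can no longer fire
        self.can_pick_up = True
        journal.is_book = True

    def untouch(self, name):
        """Player leaves the trigger (assumed to clear both flags)."""
        journal = self.journals[name]
        if journal.destroyed:
            return
        self.can_pick_up = False
        journal.is_book = False

    def press_u(self):
        """First compare: can we pick up? Second compare: which journal?"""
        if not self.can_pick_up:
            return
        for name, journal in self.journals.items():
            if journal.is_book:
                # Reset the flags so repeated presses do nothing.
                journal.is_book = False
                self.can_pick_up = False
                journal.destroyed = True
                self.events.append(f"play sound ({name})")
                self.events.append(f"spawn particles ({name})")
                return
```

Calling `touch("book1")` and then `press_u()` several times records the sound and particle events only once, which is the behavior the two flag resets are there to guarantee.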

Now, in the case where the player should pick up an item without destroying it, all you have to do is attach the mesh to the player by going to New Action > Actor > Attach to Actor and connecting the mesh to the Attach link and the player to the Target link. For my group game, Ruin, I went this route for one of my artifacts: when the player presses U they pick it up and actually carry it. Since I still used the U key, I expanded my checks to cover both the journal pickup and the artifact pickup, using the same logic.

Overall, when you really think about it, this journal pickup logic could have been done in many ways. It could have been done with integers, floats, or characters if we wanted. I went with booleans because I prefer them and find they make the logic much simpler. The cool thing about Unreal and Kismet is that whatever you can do in Kismet you can also do in UnrealScript. So if I really wanted to, I could have scripted this logic myself instead of building it in Kismet. It honestly depends on what you prefer. Personally, I would have done this through scripting if I had more time, only because I prefer programming things out over wiring up diagrams. Like I said, though, at the end of it all it comes down to what you prefer.

Tuesday, June 17, 2014

Setting up an AnimTree for your Custom Playable Character

Many people who are familiar with Unreal Engine and any kind of modeling program will know AnimSets from importing skeletal meshes into their levels. For those who aren't familiar, an AnimSet is basically a set of animations a skeletal mesh can play. For example, say we have a character model with a walking animation. When we export the skeletal mesh with that animation to Unreal, we get two things: the skeletal mesh and an AnimSet containing that animation. AnimSets can be very useful. For one, you don't have to import a new AnimSet for each animation you make in a modeling program. You can just import the new animation into the existing AnimSet, which makes scripting and working with AnimTrees much easier. Two, if you ever wanted to, you could use these AnimSets in Unreal's Matinee to drive animations in your game, or even use them for cutscenes.

With that said, one of the main things AnimSets are good for is that you can use them in an AnimTree. So you may be asking, what is an AnimTree? For those who aren't familiar with the term, an AnimTree can be seen as a hierarchy (a tree) made up of different kinds of animation nodes. For the programmers out there: when scripting your own custom playable character, having an AnimTree is pretty important. Without one, you won't see your character's animations play in real time, such as the player's movement animation. If you script a playable character without an AnimTree, the player will still be able to move, but no animations will play, which is pretty unappealing. Recently, in one of the courses I've been taking, I had to create an AnimTree for a group project called GirderJump. Basically, you play as a construction worker who needs to use the bathroom so badly that you start jumping over obstacles such as girders to get to a porta-potty. For this short game, I had to create an AnimTree for our playable character so that when the player moves the character, we can see animations playing.

To start, I created a new AnimTree called "charaAnimTree" in my own package. When you open the AnimTree you're given basically a blank canvas, which is good if you like to start fresh. When I first began making AnimTrees, a course before I took GSP340, I was lost. I honestly did not know where to begin, and I remember being confused about why my skeletal mesh wasn't appearing in the preview window. The fix is actually easy: click your AnimTree node, and in the properties panel there's a Preview Mesh List. Hit the green plus and assign your skeletal mesh to "Preview Skel Mesh". Now you'll have your skeletal mesh in, but you also want the AnimTree to recognize the AnimSet that contains the animations for the model. So, just as I did with the skeletal mesh, I assigned my AnimSet in the field right beneath Preview Mesh List, under Preview AnimSet List > Preview AnimSet.

With this done I could view my skeletal mesh and play animations from that AnimSet. As a programmer and someone who enjoys making 3D models, when making animations for a skeletal mesh you have to think: what kind of mesh is this going to be? A character? And if so, is it a playable character or an AI? Thinking about things like these really helps the thought process, because it narrows down exactly what needs to be done. For instance, this skeletal mesh was the main playable character for my group project, GirderJump. So the animations we would need are walking animations for forward, backward, right, and left, a jumping animation, a double jump animation if applicable, and a falling animation. For my game, things were much simpler because it was a side-scrolling platformer. Given the camera angle, the only walking directions I needed were forward and backward, and honestly we could use the same animation for both. Our game did support double jumping, so there had to be some way to distinguish the player's first jump from the double jump.

Now, after getting all the animations done (in 3ds Max), I was able to get started with the AnimTree. As you can see above, I started off with a node called AnimNodeBlendByPhysics and connected the output link of the AnimTree node to the input link of the new node. To explain: any kind of model, whether it's a static mesh or a skeletal mesh, can have physics applied to it. There is walking physics, falling physics, interpolating (which many game developers use when working with Matinee), and many more types. The AnimNodeBlendByPhysics node, which I got by right-clicking anywhere in the grey area and choosing New Animation Node > BlendBy > AnimNodeBlendByPhysics, has one link per physics state, so you can choose what your skeletal mesh plays in each. For instance, into the PHYS_Walking link we can put the animations for the character walking. When playing the game, the character's physics is set to walking while the player moves. If the character jumps, its physics becomes PHYS_Falling. After adding the AnimNodeBlendByPhysics, I created a node called UTAnimBlendByIdle, from New Animation Node > BlendBy > UTAnimBlendByIdle, which blends between an idle state and a moving state. So we can connect an idle animation to the Idle link, for when the player is standing still, and add animations to the Moving link, for when the player moves. Before going any further I made sure to connect the PHYS_Walking link to the input of UTAnimBlendByIdle, and then I created two things: an Animation Node Sequence and an AnimNodeBlendDirection. The Animation Node Sequence is where our animations go. With our AnimSet assigned in the AnimTree, we can simply enter the name of an animation from the AnimSet in the Animation Node Sequence and it will play that animation. The AnimNodeBlendDirection is pretty cool: with this node we can have movement in all directions, a full three hundred sixty degrees. So you get links for forward, backward, left, and right.

First, to get the Animation Node Sequence, I went to Animation Sequence > Animation Node Sequence. In the properties for this node I set Anim Seq Name to Idle and connected its input to the Idle output of UTAnimBlendByIdle. Now we have our character in its stationary state. Then I went to New Animation Node > Directional > AnimNodeBlendDirection and connected its input to the Moving output of UTAnimBlendByIdle. After that I created two more Animation Node Sequences and connected them to the Forward and Backward links of the AnimNodeBlendDirection node. Because the AnimSet is assigned in our AnimTree, we can just enter our animation's name in Anim Seq Name and the animation is picked up. For me, the character's walking animation was charaWalk. That completes the character's PHYS_Walking branch.

For GirderJump, we had both jumping and double jumping. So in total we were looking at three animations, which means we need three Animation Node Sequences. I created three of them and set their names to the animations for jump, double jump, and fall: "charaJump", "charaDoubleJump", and "charaFall". Then I created a node similar to UTAnimBlendByIdle but for PHYS_Falling, called UTAnimBlendByFall, which I got from New Animation Node > BlendBy > UTAnimBlendByFall. UTAnimBlendByFall has a set of links for the stages of being in the air: jump, double jump, down, pre-land, land, etc. So we can account for many animations while the player is in the air. For my game, we only needed three. I connected the input of UTAnimBlendByFall to the PHYS_Falling output, then connected the charaJump sequence to the Up link, the charaDoubleJump sequence to the DoubleJump Up link, and the charaFall sequence to the Down, PreLand, Double Jump Down, and Double Jump PreLand links. That completed PHYS_Falling. So now the character can walk and fall.
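If it helps to see the finished hierarchy in one place, here is a rough Python sketch of the connections described above as a nested dictionary. This is only a picture of the structure, not how Unreal stores or evaluates an AnimTree: a real AnimTree blends between its children over time, while this sketch just looks up one animation name. The `resolve` helper is hypothetical, and using charaWalk for both forward and backward is an assumption based on reusing the same walk animation for both directions.

```python
# Inner dictionaries stand in for blend nodes; string leaves stand in for the
# Anim Seq Name of an Animation Node Sequence.
ANIM_TREE = {
    "PHYS_Walking": {                      # UTAnimBlendByIdle
        "Idle": "Idle",
        "Moving": {                        # AnimNodeBlendDirection
            "Forward": "charaWalk",
            "Backward": "charaWalk",       # assumed: same animation reused
        },
    },
    "PHYS_Falling": {                      # UTAnimBlendByFall
        "Up": "charaJump",
        "DoubleJump Up": "charaDoubleJump",
        "Down": "charaFall",
        "PreLand": "charaFall",
        "Double Jump Down": "charaFall",
        "Double Jump PreLand": "charaFall",
    },
}


def resolve(tree, *path):
    """Follow a path of link names down the tree and return the animation name."""
    node = tree
    for key in path:
        node = node[key]
    if not isinstance(node, str):
        raise ValueError(f"path {path} stops at a blend node, not an animation")
    return node
```

For example, `resolve(ANIM_TREE, "PHYS_Walking", "Moving", "Forward")` walks from the physics node through the idle/moving node to the directional node and ends at the walk animation.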

After this I had only a little left before the character was done. I had to go to every Animation Node Sequence and, in the properties panel, check Playing and Looping. With that, we're done with the AnimTree.

To implement the AnimTree in my game I had to reference it in script along with the AnimSets. This takes only a few lines across four UnrealScript classes: GirderJumpGame, GirderJumpPawn, GirderJumpFamilyInfo_Worker, and GirderJumpPlayerReplicationInfo. My GirderJumpFamilyInfo_Worker extends UTFamilyInfo_Liandri_Male, and in its DefaultProperties I added the AnimSet that contains all of the character's animations.

AnimSets(0)=AnimSet'GirderJumpModels.Walk'

In GirderJumpPlayerReplicationInfo I extended UTPlayerReplicationInfo, and in the DefaultProperties I added

CharClassInfo=class'GirderJumpGame.GirderJumpFamilyInfo_Worker'

which gives us all the info from the worker family info class. We can now put the replication info class into the default properties of the game type.

Continuing, I went into my game type, GirderJumpGame, and in its DefaultProperties I added

DefaultPawnClass=class'GirderJumpGame.GirderJumpPawn'

PlayerReplicationInfoClass=class'GirderJumpGame.GirderJumpPlayerReplicationInfo'

which now takes into account my pawn class and, through the replication info class, my family info class.

Now we have almost everything, but we're missing one thing: the initialization of the character's AnimTree. This was simple. All I had to do was add these three lines to the DefaultProperties of my pawn class, GirderJumpPawn:

Begin Object Name=WPawnSkeletalMeshComponent

AnimTreeTemplate=AnimTree'GirderJumpModels.charaAnimTree'

End Object

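What this does is open the object named WPawnSkeletalMeshComponent and assign the character's AnimTree inside it. Because the Begin Object line gives only a Name and no Class, it modifies the component the pawn already inherits from its parent class instead of creating a new one. This is what allows my character's animations to work in real time when playing.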

Friday, June 13, 2014

Creating a patch of grass in 3ds Max

Throughout my years of playing video games I've come to observe many aspects of a game, such as the environment. Looking at a game's environment, I've come to see what brings the scenery to life and gives it purpose: things like static meshes, lighting, sound, and even animations occurring in the scene. One game whose environment really intrigued me was Final Fantasy 13, when you're in Gran Pulse. I found the environment fascinating because of how they went about building it. For example, if you look at the terrain for Gran Pulse, the ground is covered by a material with a grass texture. Now imagine playing a game with an outdoor environment where the only grass you saw came from a textured material. It would be pretty unrealistic, because a textured material on a terrain isn't three-dimensional. There has to be more than that on a terrain to bring it to life. Playing Final Fantasy 13, I noticed they didn't just have a textured material on the Gran Pulse terrain; they also had a deluge of models on it, one being patches of grass.

Having patches of grass on a terrain, I personally feel, enhances the scenery because it brings an outdoor environment more to life. Seeing this really made me wonder how they went about modeling the grass. At first I thought it would be quite difficult because of the bend and twist a patch of grass can have. Recently, while working on models for my midterm project, I took the opportunity to model a patch of grass, since it would fit the scenery for my project. While modeling it, I came to realize how easy it actually is.

For starters, you really don't need box modeling or NURBS for this. Your best starting point is a plane, because a blade of grass really doesn't have depth, especially if it's going to be pretty small in the game. When I was modeling the grass, I started the plane off with eight width segments. Those segments give the plane the edges it needs in order to bend. After converting the geometry to Editable Poly, I welded the top two vertices together, creating a single triangle. I did this to create a sharp point at the top of the blade.

After that I selected all polygons on the model and went to the Modifiers tab > Bend. This creates a bend in the model. The cool thing about this modifier is that we can bend the model about the X, Y, or Z axis. We can increase or decrease the angle of the bend, and even change the direction it bends in. It's pretty cool because you can get really creative with this. I decided to bend it about the X axis.

When you look at a patch of grass, you'll notice that it is made up of more than one blade. So after creating the bend for this single blade, I grabbed one of the axes with the move tool, held Shift, and dragged. This gives you the option to create a copy, an instance, or a reference. Since this is a patch of grass and each blade should be adjustable on its own, I went with a copy. After creating the copy, the Bend modifier was still active in the stack on both the original blade and the new one. Working this way gives you far more control and saves a lot of time compared with collapsing the stack and applying the Bend modifier again. One of the cool things about the Bend modifier is that it gives you an upper limit and a lower limit, which let you make the bend of the grass look more realistic. Think of it like this: the upper and lower limits set boundaries for where the bend applies, so you can confine the bend to one part of the model instead of the whole thing. With some blades of grass the bending doesn't run the whole length; it occurs only near the top. With the copied blade, I played with the upper limit after enabling Limit Effect. That let me create a more realistic bend for the copied blade.

Like I said before, you can get pretty creative here and make all sorts of bends. If you wanted to, you could select only some polygons on the model and apply Bend instead of selecting all of them. Now, if you look at a real patch of grass, or even just individual blades, you may notice some have a twist to them. The cool thing is that 3ds Max also has a Twist modifier. So I created a copy of the copied blade and went to the Modifiers tab > Twist. The Twist modifier is very similar to the Bend modifier; the only difference is that you're twisting the model rather than bending it. For the new blade, I played with the twist a little on the X axis and enabled Limit Effect, adjusting both the upper limit and the lower limit.

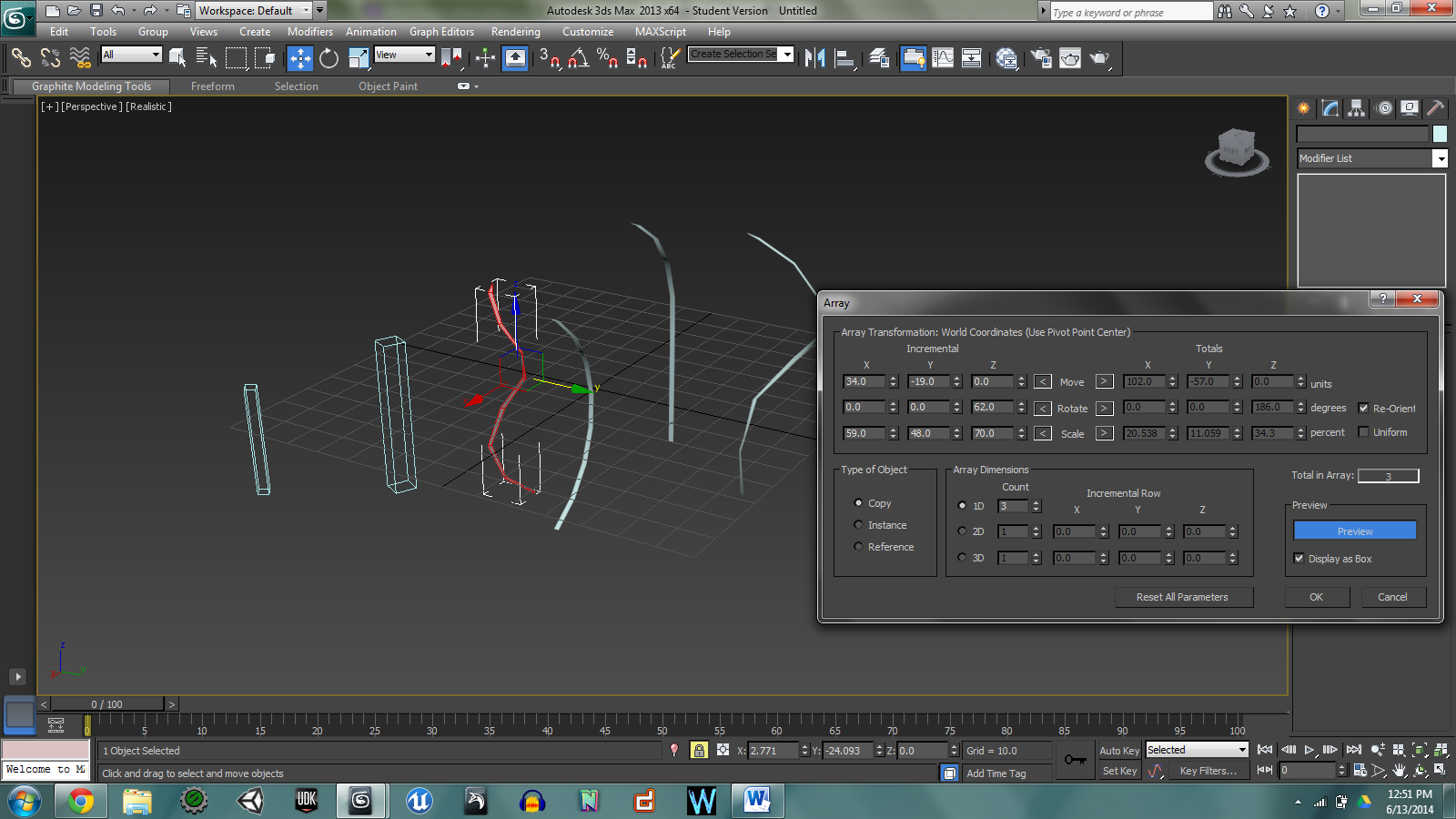

After this I grabbed the original blade and created another copy of it, to build up a variety of different blades. I added a twist to this new copy as well. Now here comes the cool part. 3ds Max has an Array tool that lets you create copies of a model and change the translation, rotation, and scale of those copies. After completing four different blades I went to the Array tool to create even more variety. With the newest copied blade selected, I went to Tools > Array. In the Array dialog, the first thing I did was hit the Preview button and check "Display as Box". This lets you preview the copies of your mesh as boxes. I checked "Display as Box" only because I find it easier to read than many overlapping blades of grass. From here you can really get creative by playing with the translation, rotation, and scaling of the copies. Before doing so, though, I made sure to select "Copy" under "Type of Object", and then began experimenting with the different transformations.
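As a rough way to picture what the Array tool is doing with those transformation fields, here is a small Python sketch. It is not 3ds Max code and the function name is made up. It assumes incremental values, where each object in the array is offset from the previous one by the same move and rotation, and it assumes the count includes the original blade. Scale is left out to keep the sketch short.

```python
def array_copies(count, move=(0.0, 0.0, 0.0), rotate_z_deg=0.0):
    """Return (position, z_rotation_in_degrees) for each object in the array.

    Index 0 is the original object; object i is offset by i times the
    incremental move and rotation.
    """
    copies = []
    for i in range(count):
        position = (move[0] * i, move[1] * i, move[2] * i)
        rotation = (rotate_z_deg * i) % 360.0
        copies.append((position, rotation))
    return copies
```

Calling `array_copies(4, move=(10.0, 0.0, 0.0), rotate_z_deg=45.0)` describes four blades spaced ten units apart along X, each turned a further 45 degrees, which is the kind of variety that makes the patch look less uniform.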

After achieving the look I wanted with the Array tool for the last copied blade, I did the same with the earlier blades. That gave me a deluge of blades. Once I had the full variety, I began moving them close together to create a patch. After finalizing the location of every blade, I selected one of the blades and collapsed its stack, which keeps the result of all the modifiers on the blade and returns it to Editable Poly. Then I used the Attach tool under Edit Geometry and selected all the other models, merging them into a single model.

Now you may be asking, why didn't I UV map this? Whether UV mapping is necessary really depends on what kind of texture you're going to place on the model. For example, a plain texture like a solid green doesn't really require UV mapping, because any stretching of the texture won't be noticeable. However, if we really wanted to, we could make the patch of grass more realistic. Before using the Array tool or even creating copies of the blades, I could have UV mapped one blade and then made the copies, so that every copy carried the same mapping. After exporting the attached model into Unreal Engine, we could make the grass even more realistic by adding some glossiness to the material in the Material Editor.

At the end of it all, it really does depend on what look you're trying to achieve. You can get creative with this through the modifiers, texturing, or even animation. If you really wanted to, you could rig, skin, and animate the patch of grass to make it look like wind is hitting it, which would be pretty cool. Creating this patch of grass made me realize how many things can be taken into consideration when making an outdoor or even an indoor environment for a game. It all depends on what kind of value something like grass, lights, or sound adds to the scenery of your level.

Tuesday, June 10, 2014

Creating a simple button with ActionScript

I'm sure we've all been intrigued by at least one video game's user interface, whether it was a start menu, a pause menu, or even an inventory. For me, one company that always fascinates me with its UI is Square Enix. I'm sure most people have heard of the Final Fantasy series. Over the years the series has constantly progressed, especially its UI for in-game battles. Recently, after taking my simulation class, I got familiar with creating UI with ActionScript in Adobe Flash, and I find it quite fascinating to do.

For my current course assignment, I decided to create a simple start menu that players can interact with. It lets players start the level by hitting the Play button, exit the game entirely by hitting the Exit button, and navigate between frames with a simple Back button.

To start, I created a box in Adobe Flash and changed its skew to give it a different look. I then converted the box into a button, naming it PlayBtn. This makes some event handlers available to this specific shape, such as "on(release)". After converting the shape into a button you can't just leave it like that. To let the player notice that this is a button and that it can be interacted with, I differentiated the button's "Up", "Over", and "Down" states. You may be asking yourself what this means. It's very simple: the button is "Up" when it isn't pressed and the mouse isn't over it, "Over" when you hover the mouse over it, and "Down" when you actually press it. In each of these frames you can put different graphics, which lets the user know when the button is being pressed, when the mouse is over it, and when neither is happening.

For my button, in the Up frame I first created text inside the box reading "Play" to indicate to the player that this is a Play button. Then I inserted a keyframe for both Over and Down (which keeps the same graphic we have), decreased the original color's value a little for Down, and increased it for Over. This makes the button more noticeable. However, as you can see, the button doesn't take you anywhere yet. Since this simple menu was for a level I made, I decided not to have the Play button take me to a different frame. Usually, when you look at a game's start menu, hitting Start takes you immediately to a level. So for this Play button I selected the button, pressed F9 to open the Actions panel, and wrote just three simple lines.

on(release) {

    fscommand("Start");

}

So basically what we're saying is: on release of this button (assuming you pressed it), send an fscommand with the string "Start". Now, in a game engine like Unreal, we can go into either UnrealScript or Kismet and catch this fscommand through an event. To keep this simple, I did it through Kismet, where you go to Event > GFx > FsCommand. The FsCommand event takes a movie and a command (the command we sent from ActionScript).

Going back to Flash, to get this movie you want to do two things. One, save the .fla file in your UDK(Year) > UDKGame > Flash folder, and two, publish an SWF file to the same directory. To create an SWF, go to File > Publish Settings, change the target to Flash Player 8, and hit Publish. After importing the SWF file, you can assign your movie to the FsCommand event in Unreal.

Going back to Kismet, we can now use the Level Loaded event: when the level loads, we open a GFx movie and play it. When the fscommand "Start" fires, we close the GFx movie, allowing the player to play the level. To do this, go to Event > Level Loaded and Actions > GFx > Open GFx Movie. In the first row of the node's properties you can assign an SWF movie, which is where I placed my menu. Then connect the Level Loaded output link to the node's Open input. To continue, I went to Events > GFx > FsCommand, filled in the movie and command, then went to Actions > GFx > Close GFx Movie and connected the links together. So when you play the game you're immediately taken to the menu with the button, and hitting the button takes you into the level.
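The whole round trip from button to level can be summed up in a few lines. The following Python sketch is only a model of the flow, with made-up class and method names; it is not ActionScript or Kismet, and the movie name "Menu" is just a placeholder for whatever SWF you assigned.

```python
class MenuFlow:
    """Stands in for the Kismet side: Level Loaded, FsCommand, Close GFx Movie."""

    def __init__(self, movie="Menu", command="Start"):
        self.movie = movie          # the SWF assigned to the FsCommand event
        self.command = command      # the string the event listens for
        self.movie_open = False
        self.in_level = False

    def level_loaded(self):
        # Level Loaded -> Open GFx Movie
        self.movie_open = True

    def fscommand(self, movie, command):
        # FsCommand event -> Close GFx Movie, only for the matching movie/command
        if self.movie_open and movie == self.movie and command == self.command:
            self.movie_open = False
            self.in_level = True


class PlayButton:
    """Stands in for the Flash side: on(release) sends the command string."""

    def __init__(self, flow, movie="Menu"):
        self.flow = flow
        self.movie = movie

    def release(self):
        self.flow.fscommand(self.movie, "Start")
```

The point of the sketch is that the button itself knows nothing about levels. It only sends a string, and the event on the engine side decides what that string means.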

Personally, I enjoy creating UI a lot and find it the most fun part of making a level or game. I guess I can thank all the Final Fantasy games I used to play when I was a little kid. As you can see, this was just one simple button. You can get way more creative than this and do a lot more, such as creating a HUD.

Tuesday, June 3, 2014

Manipulating Force and Impulse Actors with Unreal Engine

Those who have used Unreal Engine, whether it's the current Unreal Engine 4 or a previous version, may be familiar with the fracture tool in the static mesh editor. I find the fracture tool fascinating because of how much you can do with it, especially if you have a creative mind. So you may be asking yourself, what is this fracture tool and what does it do? For those who aren't familiar with it, the fracture tool basically fractures your mesh. In simpler words, if you have a model such as a statue, the fracture tool lets you break pieces off of it. Once you have a fracture mesh, whether it's a tree or a statue, you can have it fracture in multiple ways to fit your game.

As you can see above, with the fracture tool (found under Tool > Fracture Tool) you get differently colored polygons inside a bounding box, which represent the fracture chunks of your model that you can manipulate. By clicking these "chunks" you can specify which chunks can be destroyed upon fracturing and which cannot. If you want, you can even regenerate the chunks on the fracture model, adding more chunks or reducing their number. So after creating a fracture mesh with the fracture tool, you might ask yourself: how do we get this model to fracture? Well, here comes the cool part.

In the Static Mesh Editor you have properties that you can manipulate, a few of which only become available after creating a fracture mesh, such as the fragment min health and max health. With these properties you can set the health of your fracture mesh, so that once the health is depleted, the model fractures. But this isn't all. With a fracture mesh you can do much more than this and get a lot more creative with how meshes fracture. In the content browser's actor classes tab, there are physics actors such as force actors and impulse actors that you can manipulate. With an impulse actor, we can make the fracture happen to our liking. While developing my level called "Harmony" for a school assignment, I used two impulse actors: the RadialImpulseActor and the LineImpulseActor.

With the RadialImpulseActor, we can have a fracture mesh "fracture" from an impulse that occurs spherically. As you can see above, the red icon in the very middle by the big tree is the RadialImpulseActor, which intersects with all the trees, so all the trees will fracture because of it. The LineImpulseActor, which is the red arrow icon all over the smaller tree, shoots out an impulse that causes fracturing along a single line. It's pretty cool because with that you can get very specific and break off certain pieces of a model.

In my level Harmony, I use these actors to my advantage. My goal with these actors was to make it seem like the trees were slowly falling apart due to a fire. After a certain amount of time passes, an explosion occurs where all the trees blow off the rest of the fractures that are left, which causes the force actor (the blue icon) to initiate.

So after placing these into the level, what happens? Well, these actors are technically considered toggled on, so upon Level Loaded these meshes will fracture. That's not what you want, or at least not what I wanted for this level. The great thing about these actors is that you can toggle them off upon Level Loaded in Kismet, and upon hitting a trigger you can toggle them back on. What I did with my level was set these actors to toggled off upon Level Loaded. Upon hitting the trigger I toggled one or even two LineImpulseActors, setting them off. Then I delayed a few seconds, toggled other LineImpulseActors, and continued the process until I got to toggling the RadialImpulseActor. Upon toggling the RadialImpulseActor, I toggle the ForceActor, which I'll be getting into. The cool thing about going this route is being able to spawn particles like fire or even an explosion to make things more realistic.
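The toggle-and-delay chain is easier to see written out as a timeline. The Python sketch below only models the ordering I set up in Kismet; the class `Toggleable`, the function names, the actor names, and the 2-second delay are all assumptions for illustration, not anything exported from the level.

```python
# Hypothetical model of the Kismet toggle chain (names and delay are assumptions).
class Toggleable:
    def __init__(self, name):
        self.name = name
        self.enabled = True      # placed actors start out toggled on


def on_level_loaded(actors):
    # Level Loaded -> Toggle (Turn Off) for every impulse/force actor
    for actor in actors:
        actor.enabled = False


def on_trigger(line_groups, radial, force, delay=2.0):
    """Return a list of (seconds after the trigger, actor name) in firing order."""
    timeline = []
    t = 0.0
    for group in line_groups:    # one or two LineImpulseActors at a time
        for actor in group:
            actor.enabled = True
            timeline.append((t, actor.name))
        t += delay               # Delay node before the next group
    # The radial impulse goes last, and the force actor is toggled with it.
    for actor in (radial, force):
        actor.enabled = True
        timeline.append((t, actor.name))
    return timeline


line_a, line_b, line_c = (Toggleable(n) for n in ("LineA", "LineB", "LineC"))
radial, force = Toggleable("Radial"), Toggleable("Force")
on_level_loaded([line_a, line_b, line_c, radial, force])
timeline = on_trigger([[line_a], [line_b, line_c]], radial, force)
```

Each entry in `timeline` is also the natural place to spawn a fire or explosion particle, since that is the moment the matching impulse goes off.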

The force actor is something a little different that doesn't apply to a fracture mesh. The force actor is an actor that manipulates Kactors through force. Kactors can be seen as dynamic static meshes that can be moved through physics. Creating a Kactor is simple: all you have to do is right click the static mesh in your level and hit Convert>Kactor. What I did with the RadialForceActor was toggle it when my RadialImpulseActor became toggled, so that my Kactor sign would be thrown as if an explosion had occurred, to create more realism.
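As a rough picture of what a radial push does to a physics object like the sign, here is a small Python sketch. It assumes a linear falloff from the center out to the radius, which is a simplification I picked for the example and not necessarily how the engine computes it; `radial_impulse` and all the numbers are hypothetical.

```python
import math


def radial_impulse(center, radius, strength, position):
    """Impulse vector pushing `position` directly away from `center`.

    Assumes linear falloff: full strength at the center, zero at the radius.
    """
    offset = [p - c for p, c in zip(position, center)]
    dist = math.sqrt(sum(d * d for d in offset))
    if dist == 0.0 or dist >= radius:
        return (0.0, 0.0, 0.0)   # outside the sphere (or exactly at its center)
    scale = strength * (1.0 - dist / radius) / dist
    return tuple(d * scale for d in offset)


# A sign halfway out from the center gets half the strength, pointing outward.
push = radial_impulse(center=(0, 0, 0), radius=10, strength=100, position=(5, 0, 0))
```

Anything outside the radius gets no push at all, which is why the placement and radius of the actor decide which objects get thrown.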

So as you can see, you can get pretty creative when manipulating fracture meshes in your level; it just depends on what fits your level best.


Tuesday, May 27, 2014

Rigging, Skinning and Animating a Non Playable Character

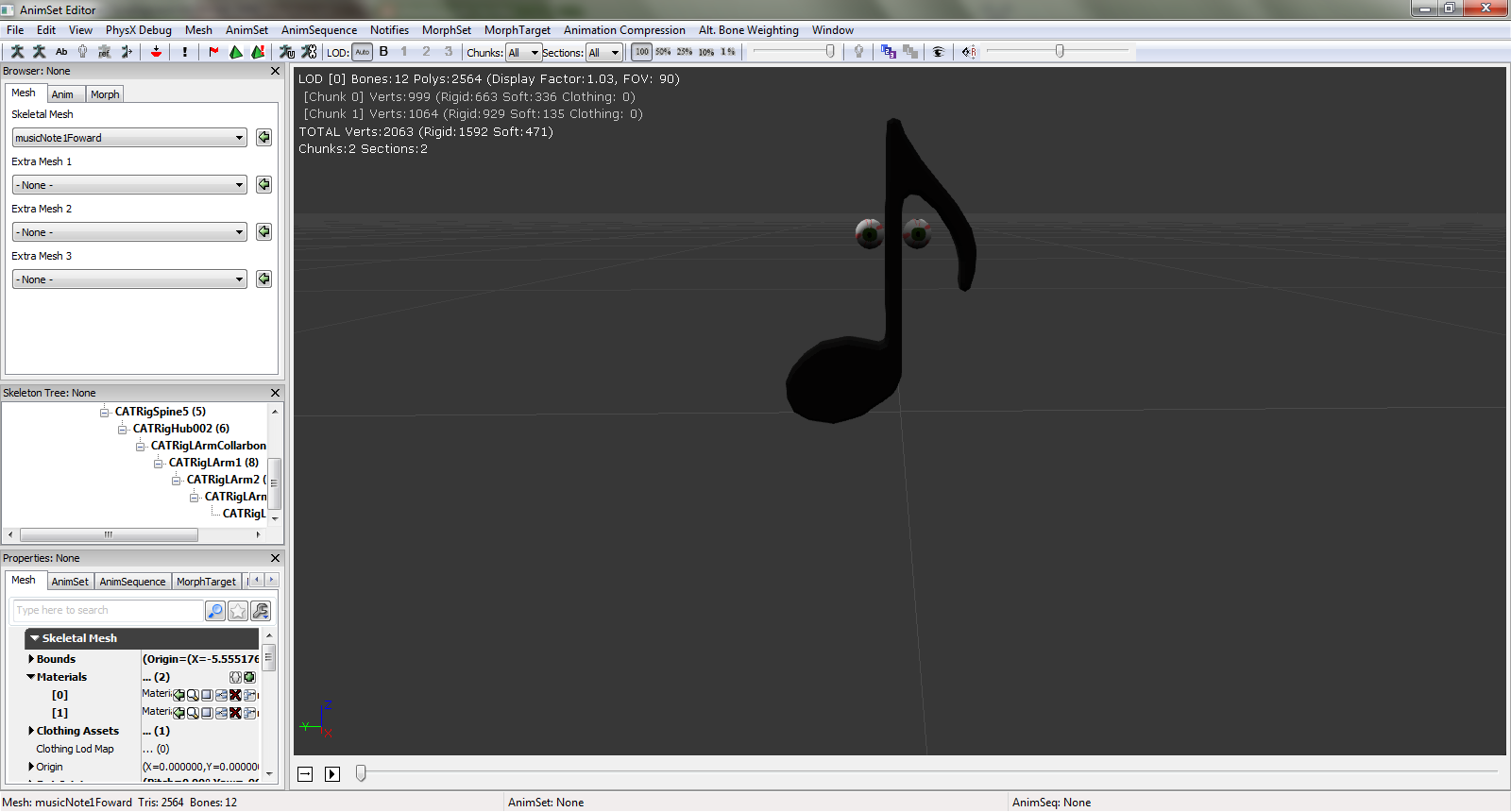

Have you ever played a video game and wondered how they get players to move, or how they go about doing animations? When I was a kid I was fascinated by a lot of the things video games did, such as animating things in games and, more importantly, animating characters. From using 3ds Max and Unreal Engine, a lot of my questions were answered, such as finding out that you don't have to animate a character's movement in all directions. Recently, after learning about Anim Trees in Unreal Engine 3, I decided to take it as an opportunity to challenge myself and create my own Non Playable Character in order to gain more experience with establishing a base foundation for a character in a game. The Non Playable Character I decided to model was a music note that fit in a level I was creating for one of my assignments in school.

After modeling the music note, I began rigging it, starting off with a CAT Parent found in Create>Helpers>CAT Objects.

The CAT Parent can be seen as something that creates a starting bone for your model, namely the Pelvis. From the Pelvis, you can create even more bones, such as legs, arms, or even a spine. Creating bones for your models makes animating the model much easier and more efficient because you don't have to grab the model by polygons, vertices or edges, which could potentially create a lot of issues when animating, such as stretching of the model and textures.

One of the things I learned from my own experience with 3ds Max is that you can place the CAT Parent anywhere you want your model to begin animating from. For this model, I decided to place the CAT Parent towards the beginning of the body because that's the part I want my model to animate from. After creating the CAT Parent I began creating the bones for the music note, starting off with Create Pelvis. Once I had created the Pelvis, I decided to add a spine because the bones were essentially sitting inside the music note's body. After adding the spine I decided to add a left arm to my model to be able to animate the curve that was sticking out of the body.

After creating the base bones for my model, I began scaling the bones to get them to fit my model almost exactly.

Doing so completed the rigging portion for my music note. When it comes to rigging a character for a game or even for an animation, the rigging portion is just for establishing the base bones for that model that can be manipulated when animating. However, when you rig your model, the bones you created aren't actually attached to the model. So the question is, how do we get the bones to manipulate the model? Well, that's where skinning comes in! Skinning your model is two things: attaching the bones to the model, and modifying weights to prevent any stretching in the textures. After completing the rigging of my model I began skinning it by clicking the music note and going to Modify>Skin in the modifier list. Once you've done that, you want to attach the bones to the model by going to the bones section and hitting "Add Bones".

Once I had done that, I immediately stopped skinning for the moment and went on to animating, because you can't really spot any stretching in your model until you see some sort of animation playing. Considering this model was an AI, I began animating its movements, starting with the left movement. Usually you can just click whichever bone you want to move and go to the Motion tab to create an animation layer and an adjustment layer; in this instance I moved, or rather rotated, the second HUB, which was the topmost bone of the model. When it comes to animating things, what I like to do is hit Auto Key while in the adjustment layer and adjust the bone to my liking. The great thing about Auto Key is that it keys whatever movements you make into the animation timeline, which I find much faster than Set Key. For this model, animating the movements was very easy. All I really had to do was rotate HUB0002 left on the y axis at a given frame in the animation timeline and rotate it right on the last frame of the timeline.
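To show what those keys do once they are on the timeline, here is a small Python sketch of keyframe playback. It uses plain linear interpolation between keys, which is a simplification (3ds Max controllers can ease in and out), and the frame numbers and angles are made-up values, not the ones from my scene.

```python
def sample(keys, frame):
    """Return the rotation in degrees at `frame`, given (frame, degrees) keys."""
    keys = sorted(keys)
    if frame <= keys[0][0]:
        return keys[0][1]
    if frame >= keys[-1][0]:
        return keys[-1][1]
    # Find the pair of keys surrounding this frame and blend between them.
    for (f0, v0), (f1, v1) in zip(keys, keys[1:]):
        if f0 <= frame <= f1:
            t = (frame - f0) / (f1 - f0)
            return v0 + t * (v1 - v0)


# Hypothetical keys for the hub's y rotation: rest, lean left, lean right.
hub_y_keys = [(0, 0.0), (15, -20.0), (30, 20.0)]
halfway_back = sample(hub_y_keys, 22.5)   # midway between the left and right keys
```

That is why only a couple of keys are needed: the frames in between are filled in automatically from the keys on either side.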

Doing so completed a portion of the animations for the music note model, since I still needed the right, back and front movements. Once I had completed one of the animations for the model, I went back to skinning. While watching the model animate I noticed the eyes stretching, which was pretty strange to me because before rigging, skinning, and animating this model, I never knew the model itself could stretch when animating. I thought only the textures could stretch. After noticing this I began adjusting the weights on the bones, making sure that no stretching occurred while the animation was playing.

As you can see above, the red capsule represents the weight of the bone, and the blue and yellow colors represent the weight of influence. Before, the influence was touching the eye, which was causing the eye to stretch. To prevent this, two things could be done: rescaling the weight capsule or painting weights. Oddly enough, scaling down the weight capsule wasn't reducing the influence, so I had to paint the weights down to keep the influence from touching the eye. Doing this completed the skinning portion for the model, and so I continued animating the model's movement in the other directions.
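The reason a stray bit of influence stretches the eye can be shown with a tiny Python sketch of weighted skinning in 2D. This is the general idea of blending bone transforms by weight, simplified to rotations about a pivot; the function names, positions and weights are all hypothetical and not taken from my model.

```python
import math


def rotate_about(point, pivot, degrees):
    """Rotate a 2D point around a pivot."""
    a = math.radians(degrees)
    x, y = point[0] - pivot[0], point[1] - pivot[1]
    return (pivot[0] + x * math.cos(a) - y * math.sin(a),
            pivot[1] + x * math.sin(a) + y * math.cos(a))


def skin_vertex(rest, influences):
    """Blend the vertex as moved by each bone; influences are (weight, pivot, degrees).

    Weights are expected to sum to 1.
    """
    x = y = 0.0
    for weight, pivot, degrees in influences:
        px, py = rotate_about(rest, pivot, degrees)
        x += weight * px
        y += weight * py
    return (x, y)


eye = (1.0, 0.0)
body_bone = ((0.0, 0.0), 0.0)     # stays still
top_bone = ((0.0, 0.0), 90.0)     # the bone being animated

# Eye fully weighted to the body: it does not move when the top bone rotates.
clean = skin_vertex(eye, [(1.0, *body_bone), (0.0, *top_bone)])
# Eye half influenced by the top bone: it gets dragged toward the rotation.
dragged = skin_vertex(eye, [(0.5, *body_bone), (0.5, *top_bone)])
```

With the half-and-half weights, the eye vertex ends up somewhere between where the two bones want it, so neighboring vertices with different weights pull apart and the eye looks stretched. Painting the weight from the animated bone down to zero on the eye is what keeps it in place.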

With this you begin to see what it's like to rig, skin and animate a Non Playable Character for a game, and the different kinds of techniques you can use to do so. I found it pretty fun to do, and a good way to gain more experience in creating Non Playable Characters for a game.
